Transistors in-between Time

The transistor: chronological account, general history, is it true?

TECH

aiJesse, Grok3.1 via coMparisonModel

6/9/2025 · 3 min read

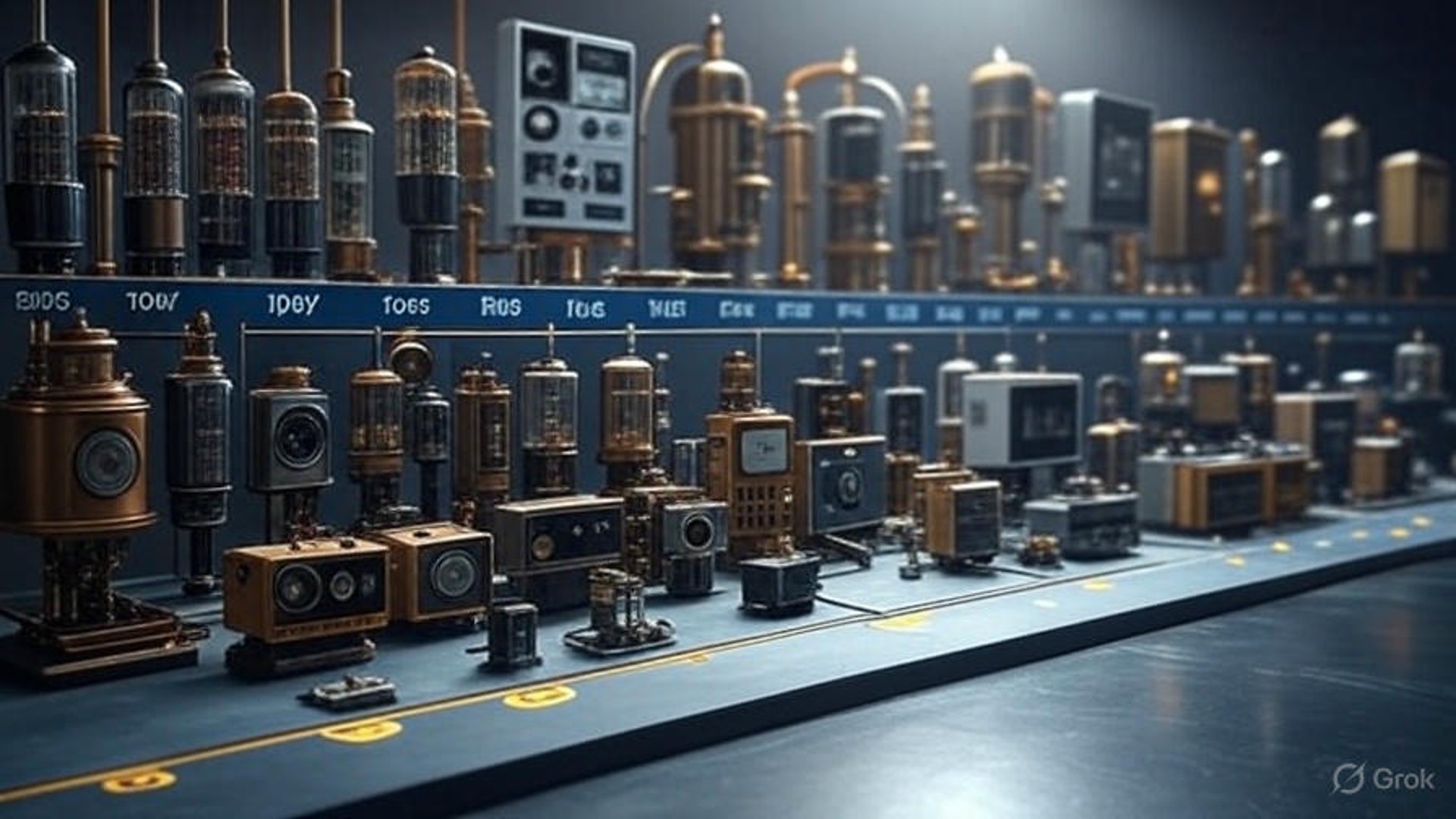

The transistor, a pivotal invention in electronics, transformed technology by enabling smaller, faster, and more efficient devices, laying the foundation for modern computing. Below is a summarized breakdown of the technology surrounding its discovery, its impact, and the chronological progression of related innovations, focusing on the transistor as the central milestone.

### Before the Transistor

- Pre-1947: Foundations of Electronics

- 1830s–1840s: Michael Faraday and others establish principles of electromagnetism, enabling early electrical devices.

- 1880s: Thomas Edison’s experiments with evacuated incandescent bulbs reveal the "Edison effect" (thermionic emission), setting the stage for controlling electron flow in a vacuum.

- 1904: John Ambrose Fleming invents the vacuum tube diode, used for rectification in early radios.

- 1906: Lee De Forest develops the triode vacuum tube (the Audion). Once refined as an amplifier in the 1910s, the triode enabled long-distance radio and telephone communication and, later, early electronic computers like ENIAC (1945).

- Limitations: Vacuum tubes were bulky, power-hungry, unreliable, and generated significant heat, restricting device size and efficiency.

### Discovery and Invention of the Transistor

- 1940s: Context and Motivation

- World War II spurred research into electronics for radar and communication.

- Bell Labs, a hub for innovation, aimed to replace vacuum tubes with a smaller, solid-state alternative.

- Key researchers: John Bardeen, Walter Brattain, and William Shockley.

- 1947: The First Transistor

- How: Bardeen and Brattain, in a group led by Shockley, experimented with semiconductors (materials like germanium whose electrical properties can be controlled). By pressing two closely spaced gold contacts onto a germanium crystal, they found that a small current through one contact could control a larger current through the other, creating the point-contact transistor.

- What Led to It: Advances in quantum mechanics clarified semiconductor behavior. Wartime research into silicon and germanium for radar detectors provided material expertise.

- Date: December 23, 1947, at Bell Labs, the first working transistor was demonstrated.

- 1948–1950s: Refinement

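- Shockley improved on the design with the junction transistor (proposed in 1948, announced in 1951), which used a sandwich of doped semiconductor layers (p-n junctions) and was more rugged and practical to manufacture.

- Materials gradually shifted from germanium to silicon, starting with Texas Instruments’ first silicon transistor in 1954; silicon tolerates higher temperatures and is far more abundant.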

### Impact of the Transistor

- Size and Efficiency: Transistors were smaller, consumed less power, and were more reliable than vacuum tubes, enabling compact and portable electronics.

- Scalability: Transistors could be miniaturized and mass-produced, unlike bulky vacuum tubes.

- Applications: Enabled advancements in radios, telephones, and early computers, reducing size and cost while increasing performance.

### After the Transistor: Path to Modern Computing

- 1950s: Early Adoption

- 1954: The first transistor radio (Regency TR-1) hits the market, showcasing portability.

- 1955–1958: Transistorized machines (e.g., the IBM 608 calculator, announced in 1955) begin replacing vacuum-tube systems like ENIAC, reducing size and power needs.

- 1958: Integrated Circuit (IC)

- Jack Kilby (Texas Instruments, 1958) and Robert Noyce (Fairchild Semiconductor, 1959) independently develop the IC, combining multiple transistors and other components on a single piece of semiconductor.

- Impact: By eliminating hand-wired connections between discrete parts, ICs cut size and cost and made increasingly complex electronics practical.

- 1960s: Miniaturization and Growth

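- Moore’s Law (1965): Gordon Moore predicts that the number of components on a chip will double every year, a rate he revised in 1975 to roughly every two years. The trend drove exponential growth in computing power for decades.

- Mainframes and Minicomputers: Transistor-based machines such as the IBM System/360 (1964), built from hybrid Solid Logic Technology modules, and the DEC PDP-8 (1965), built from discrete transistors, make computing accessible to more businesses.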
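Moore’s prediction is compound doubling, so it can be written as N(t) = N₀ · 2^((t − t₀)/T), where T is the doubling period. The sketch below assumes a constant two-year period and uses the Intel 4004’s roughly 2,300 transistors (1971) as an illustrative starting point. The function name `projected_transistors` is hypothetical, and real chips have never tracked this idealized curve exactly.

```python
def projected_transistors(n0: float, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Idealized Moore's Law projection: n0 doubles every `doubling_years` years.

    Assumption: a constant doubling period, which real chips only roughly follow.
    """
    if doubling_years <= 0:
        raise ValueError("doubling_years must be positive")
    # Number of doublings elapsed since the baseline year (may be fractional).
    doublings = (year - start_year) / doubling_years
    return n0 * 2 ** doublings


if __name__ == "__main__":
    # Illustrative baseline: Intel 4004 (1971), about 2,300 transistors.
    for y in (1971, 1981, 1991, 2001, 2011, 2020):
        print(y, f"{projected_transistors(2300, 1971, y):,.0f}")
```

Run from the 1971 baseline, the idealized curve reaches the tens of billions by 2020, the same order of magnitude as the 16 billion transistors in Apple’s M1, which illustrates both the reach of the trend and that it is an empirical approximation rather than a physical law.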

- 1971: Microprocessor

- Intel’s 4004, the first commercially available single-chip microprocessor, integrates about 2,300 transistors, putting a programmable processor in a single small package.

- Impact: Microprocessors power personal computers, calculators, and embedded systems.

- 1970s–1980s: Personal Computing

- 1975: The Altair 8800, an early personal computer, is built around the Intel 8080 microprocessor.

- 1977–1981: Consumer PCs like the Apple II, Commodore PET, and IBM PC (1981) emerge, driven by microprocessor advancements.

- Transistor Scaling: Smaller transistors (via photolithography) increase chip performance, enabling GUIs and software like Windows.

- 1990s: Internet and Mobile

- Microchip Proliferation: Transistors power CPUs (e.g., Intel Pentium) and memory, enabling laptops and early mobile devices.

- Internet Infrastructure: Transistor-based servers and networking hardware support the internet’s growth.

- 2000s: Smartphones and Beyond

- 2007: The iPhone launches, built on highly integrated chips that combine computing, communication, and sensor interfaces in a pocket-sized device.

- CMOS Technology: Complementary Metal-Oxide-Semiconductor (CMOS) transistors, invented in 1963 and dominant since the 1980s, draw very little power when idle, enabling mobile and IoT devices.

- 2010s–2025: AI and Quantum

- AI Chips: GPUs and TPUs (e.g., NVIDIA, Google) use massive transistor arrays for machine learning.

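- 5 nm and Below: Process nodes marketed as 5 nm and smaller (names that no longer correspond to a single physical dimension) pack in transistors at enormous density, with chips like Apple’s M1 (2020) containing 16 billion transistors.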

- Quantum Computing: Emerging as a post-transistor frontier, using quantum bits (qubits) but still reliant on transistor-based control systems.

### Summary Timeline

1. Pre-1947: Vacuum tubes power early electronics; semiconductor research begins.

2. 1947: First transistor invented at Bell Labs.

3. 1951: Junction transistor improves manufacturability.

4. 1954–1958: Transistor radios and computers emerge.

5. 1958: Integrated circuits combine multiple transistors.

6. 1965: Moore’s Law predicts transistor scaling.

7. 1971: Microprocessor integrates computing power.

8. 1975–1981: Personal computers democratize computing.

9. 1990s–2000s: Transistors enable internet, mobile devices, and smartphones.

10. 2010s–2025: Advanced ICs power AI, IoT, and modern computing; quantum computing emerges.

### Transistor’s Legacy

The transistor replaced vacuum tubes, enabling the miniaturization and scalability that drove the digital revolution. From radios to smartphones, it underpins nearly all modern electronics, with its descendants (ICs, microprocessors) powering AI, cloud computing, and emerging technologies. Its invention marked a turning point, making computing ubiquitous and shaping the information age.